Avatar SDK UE plugin

2.2.0

Realistic avatar generation toolset for Unreal Engine

Most of the sample functionality is implemented in the "MyFullbodyAvatar" actor Blueprint. "MyFullbodyAvatar" is only a demo of how the Avatar SDK UE Plugin toolset is used, but it is a good starting point: you can begin implementing your own avatar generation workflow by modifying it.

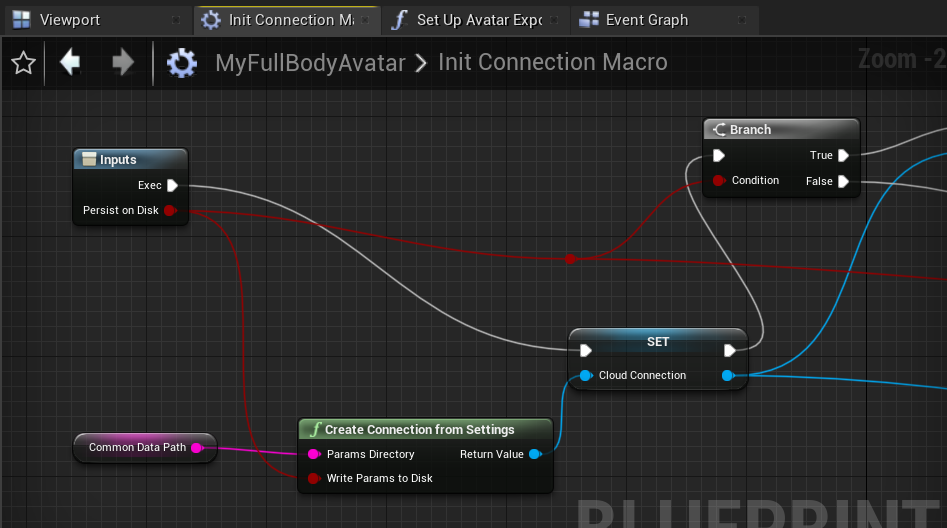

Before any of the UE Plugin methods can be used, you must initialize UAvatarSDKCloudConnection for your app. Make sure that your Avatar SDK account's ClientID and ClientSecret are set in the corresponding fields of the Avatar SDK Plugin settings (see Getting started). The "Create Connection From Settings" function constructs the UAvatarSDKCloudConnection object with the client ID and client secret read from the settings. Alternatively, use the CreateConnection method and pass ClientID and ClientSecret as its arguments. UAvatarSDKCloudConnection becomes initialized after the "Async Init Avatar SDK Connection" node executes successfully (see the InitConnection macro). The initialization status is reported to the UI with the help of the "Call on status" blueprint. The initialized connection is stored in the Avatar SDK Cloud Connection variable and is used in all Cloud API calls.
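The two ways of obtaining a connection, and the rule that it must be initialized before any Cloud API call, can be modeled outside the engine. The Python sketch below is not the plugin's API: `CloudConnection`, `from_settings`, `init`, `require_initialized`, and the settings keys are hypothetical names that only mirror the flow described above, and the real initialization performs network authorization, which is stubbed out here.

```python
from dataclasses import dataclass


@dataclass
class CloudConnection:
    """Hypothetical stand-in for UAvatarSDKCloudConnection."""
    client_id: str
    client_secret: str
    initialized: bool = False

    @classmethod
    def from_settings(cls, settings: dict) -> "CloudConnection":
        # Mirrors "Create Connection From Settings": credentials come from config.
        return cls(settings["client_id"], settings["client_secret"])

    def init(self, on_status=print) -> bool:
        # Mirrors "Async Init Avatar SDK Connection". The real node authorizes
        # against the cloud; this stub only checks that credentials are present.
        self.initialized = bool(self.client_id and self.client_secret)
        on_status("connected" if self.initialized else "missing credentials")
        return self.initialized

    def require_initialized(self) -> None:
        # Guard to run before every Cloud API call.
        if not self.initialized:
            raise RuntimeError("connection is not initialized")
```

Passing the status callback explicitly plays the role of the "Call on status" blueprint: the caller decides how the result reaches the UI.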

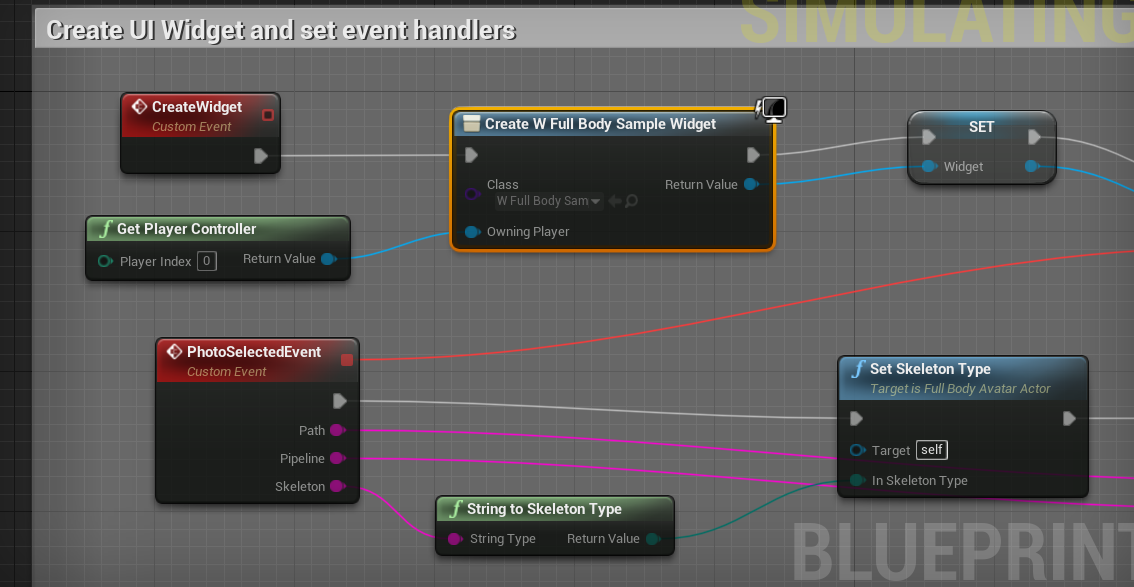

Since the sample HUD has a number of controls, we need to set up handlers for them. Most of the events are bound in the "Create UI Widget and set event handlers" section of the blueprint graph. The most important one is "PhotoSelectedEvent", which is raised when the user chooses an image to send to the server for avatar generation. The events that control haircut and animation selection are also set up here.

When "PhotoSelectedEvent" is raised, we bring the UI and the actor to their initial state by calling the "Reset" function and start the avatar generation process. Before that, however, we need to obtain the set of available generation and export parameters. Please note that this step is useful for parameter discovery and should be omitted from the normal application workflow once you have figured out which parameters fit you best.

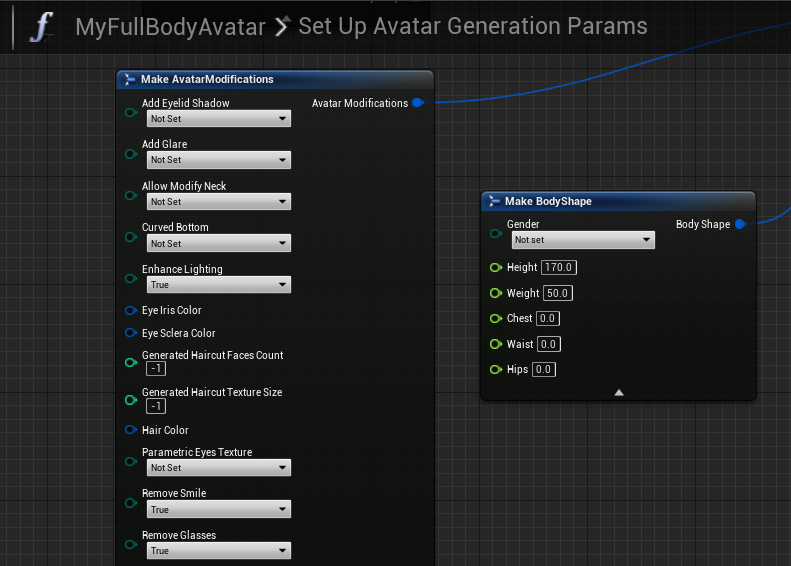

Parameters are represented by FAvatarGenerationParams and retrieved by the "Async Get Available Parameters" blueprint. In this sample we modify the result and ask the Cloud API to generate an avatar with the parameters set up in the SetUpAvatarGenerationParams function. You may set them up according to the needs of your application (please refer to https://api.avatarsdk.com/#computation-parameters for more information about computation parameters). In our sample, we set the desired body shape and configure some avatar modifications (https://api.avatarsdk.com/#avatar-modifications).

Here we ask Avatar SDK Cloud to remove the smile and glasses on the original selfie and enhance lighting.
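One way to think about the discovery step is as an intersection: request only those options that the server reported as available. The sketch below illustrates this in Python. The helper `select_available` is hypothetical, and the key names (`remove_smile`, `remove_glasses`, `enhance_lighting`) are assumptions made for illustration; the authoritative names are listed in the Cloud API documentation linked above.

```python
def select_available(requested: dict, available: set) -> dict:
    """Keep only the requested options that the server reported as available."""
    return {name: value for name, value in requested.items() if name in available}


# Assumed key names, for illustration only.
available_modifications = {"remove_smile", "remove_glasses", "enhance_lighting"}
requested = {
    "remove_smile": True,
    "remove_glasses": True,
    "enhance_lighting": True,
    "made_up_option": True,  # not reported by the server, so it is dropped
}

modifications = select_available(requested, available_modifications)
```

Once the set of useful parameters is known, the application can skip discovery and send a fixed dictionary instead.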

To obtain the available export parameters, use the corresponding blueprint macro, "RetrieveAvailableExportParametersMacro". The set of available export parameters is later used in "SetUpAvatarExportParams" to build the export parameters we will request for our avatar.

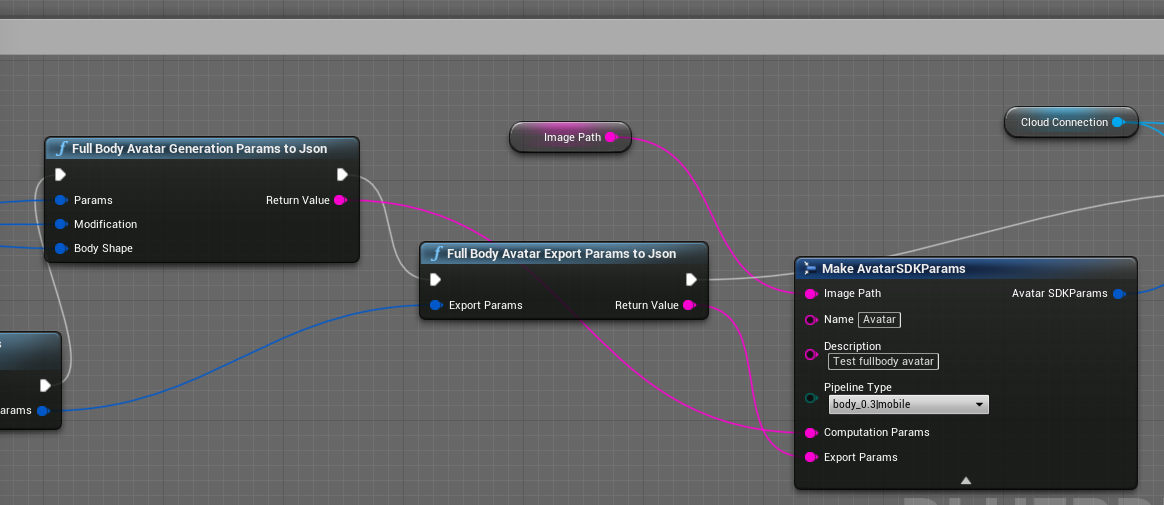

Now it is time to connect everything together: the generation parameters, the export parameters, the modifications, and the body shape. With the help of the "Full Body Avatar Generation Params to JSON" and "Full Body Avatar Export Params to JSON" nodes we build the JSON avatar computation parameters. Finally, we make an instance of the AvatarSDKParams structure, which contains all of the required information about the avatar, including the path to the selfie, the pipeline type, and the JSON parameters generated in the previous step.
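The assembly step can be pictured as two independent JSON serializations bundled with the selfie path and the pipeline type. The following Python sketch shows that shape only. `AvatarParams`, its field names, and `build_params` are hypothetical and do not reproduce the actual layout of AvatarSDKParams; the parameter keys in the usage lines are placeholders as well.

```python
import json
from dataclasses import dataclass


@dataclass
class AvatarParams:
    """Hypothetical counterpart of the AvatarSDKParams structure."""
    selfie_path: str
    pipeline_type: str
    parameters_json: str
    export_parameters_json: str


def build_params(selfie_path: str, pipeline_type: str,
                 generation: dict, export: dict) -> AvatarParams:
    # Serialize each parameter set separately, as the two "... to JSON" nodes do.
    return AvatarParams(
        selfie_path=selfie_path,
        pipeline_type=pipeline_type,
        parameters_json=json.dumps(generation, sort_keys=True),
        export_parameters_json=json.dumps(export, sort_keys=True),
    )


# Placeholder values; real keys come from the parameter discovery step.
params = build_params(
    "selfie.jpg",
    "FitPerson",
    {"avatar_modifications": {"remove_smile": True}},
    {"format": "fbx"},
)
```

Keeping the two JSON strings separate means either one can be logged or inspected on its own when a request is rejected.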

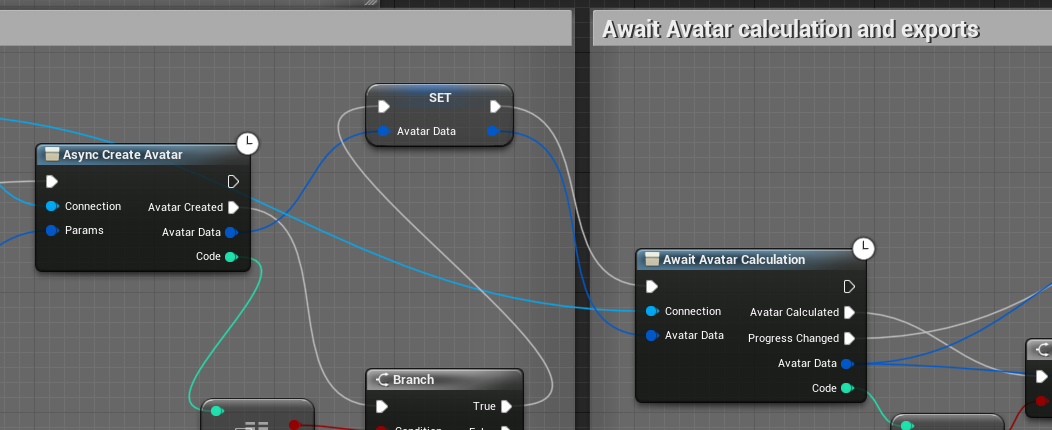

We use the "Async Create Avatar" node to create a new avatar calculation task. The "Await Avatar Calculation" node then polls the state and progress of the created task. Please note that a correctly initialized AvatarSDKCloudConnection (see the connection initialization step) must be passed to every Blueprint node that takes AvatarSDKCloudConnection as a parameter.

The "Progress changed" delegate of "Await Avatar Calculation" is called every time the node obtains new information about the avatar calculation progress (the information is written to FAvatarData). In the sample, we use it to print the progress in the UI.

Once the avatar is ready, the "Avatar Created" delegate is called. We store the resulting AvatarData in a variable because we will need it later. If you need to get the AvatarData for an avatar created earlier, you may use the UAsyncGetAvatarData blueprint node. Please keep in mind that the input parameter of type FAvatarData must contain the correct avatar code in the "Code" field.
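The polling behavior with its two delegates can be sketched as a plain loop. This Python example is a model under stated assumptions, not the node's implementation: `await_calculation`, the shape of the state dictionary, and the status strings `"Completed"` and `"Failed"` are all hypothetical.

```python
import time


def await_calculation(fetch_state, on_progress, on_created,
                      interval_s=1.0, sleep=time.sleep):
    """Poll fetch_state() until the task completes, reporting progress changes."""
    last_progress = None
    while True:
        state = fetch_state()  # assumed shape: {"status": str, "progress": int}
        if state["progress"] != last_progress:
            # Counterpart of the "Progress changed" delegate.
            last_progress = state["progress"]
            on_progress(last_progress)
        if state["status"] == "Completed":
            # Counterpart of the "Avatar Created" delegate.
            on_created(state)
            return state
        if state["status"] == "Failed":
            raise RuntimeError("avatar calculation failed")
        sleep(interval_s)
```

The `sleep` argument is injectable so that the loop can be exercised without real delays; in an application the default `time.sleep` or an engine timer would take its place.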

Now it's time to await the avatar exports, for which we use the corresponding node, "Await Avatar Export". It works in much the same way as "Await Avatar Calculation". We store the result in the "Export Data" variable.

Avatar SDK UE plugin provides a set of tools for acquiring the results of avatar computations.

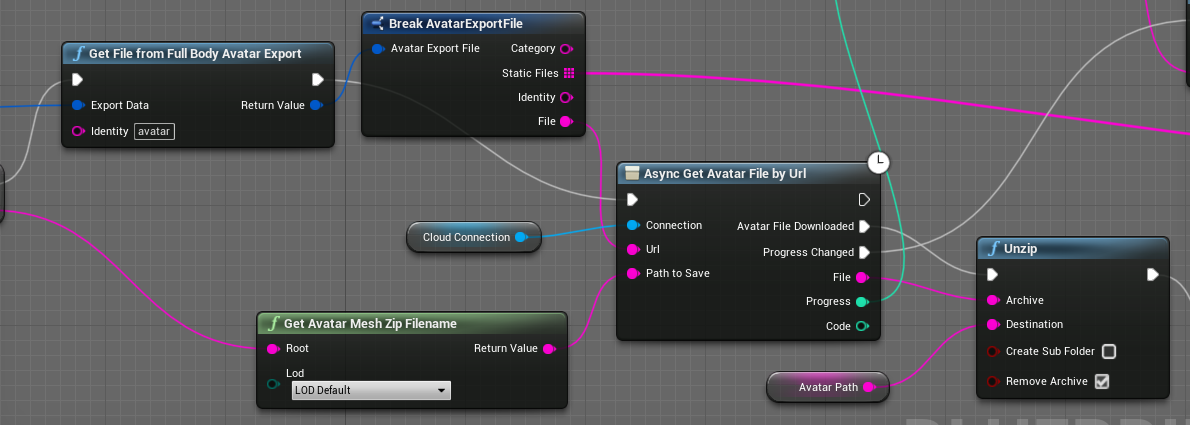

We already have the initialized connection, ExportData, and AvatarData stored in the corresponding variables. The "Async Get Avatar File By Url" node is simple to use: it downloads an archive with the avatar mesh. We take the URL from ExportData and determine the destination directory with the help of the AvatarData structure and the GetAvatarMeshZipFilename node. After that, we extract the files from the archive with the AvatarSDKStorage Unzip node.
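The "derive a destination from the avatar, then unzip next to it" step can be illustrated with the Python standard library. This is a sketch only: `mesh_zip_path`, `extract_mesh`, and the one-directory-per-avatar-code layout are assumptions and do not describe what GetAvatarMeshZipFilename or the Unzip node actually produce.

```python
import zipfile
from pathlib import Path


def mesh_zip_path(storage_root: Path, avatar_code: str) -> Path:
    # Hypothetical layout: one directory per avatar, keyed by its code.
    return storage_root / avatar_code / "mesh.zip"


def extract_mesh(zip_path: Path) -> list:
    """Unpack the archive into its own directory and return the member names."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(zip_path.parent)
        return archive.namelist()
```

Keying the directory by avatar code keeps files of different avatars apart, so a later load only needs the code to find the mesh again.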

We also download static export files with the help of the AsyncGetAvatarFilesArray node. These files need to be downloaded only once and will be reused by avatars generated later.
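The "download once, reuse later" behavior amounts to a cache check before each download. The Python sketch below shows one way to express it. `ensure_static_files` and its `download` callback are hypothetical and stand in for whatever transport the application uses; the plugin's own caching logic may differ.

```python
from pathlib import Path


def ensure_static_files(urls_by_name: dict, cache_dir: Path, download) -> list:
    """Download each file only if it is not cached yet; return the new names."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    downloaded = []
    for name, url in urls_by_name.items():
        target = cache_dir / name
        if target.exists():
            continue  # already fetched for an earlier avatar
        target.write_bytes(download(url))
        downloaded.append(name)
    return downloaded
```

Calling the function a second time with the same arguments performs no downloads and returns an empty list.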

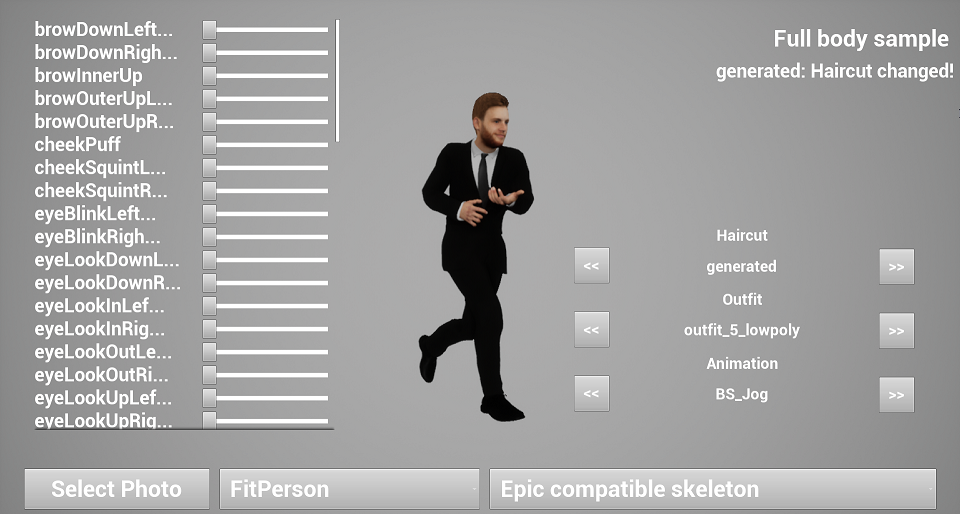

Loading avatar data is straightforward: the FullBodyAvatarSDKActor LoadAvatar method needs the Path, ExportData, and AvatarData arguments that we already have (see the previous steps). The FullBodyAvatarSDKActor class inherits from Unreal's AActor and contains high-level functions for managing an avatar instance. The actor methods LoadAvatar and AddHair add the corresponding mesh and texture elements to the avatar. Note that the data of all added haircuts is kept in memory until "Reset Full Body Avatar SDK Actor" is called. To display one of the added haircuts, use the SelectHair method.

Avatar morphs are loaded automatically with the body mesh. Setting morph targets should be performed with the corresponding FullBodyAvatarSDKActor SetMorphTarget method.

Outfits are also loaded automatically by the LoadAvatar call and are managed by the actor methods "NextOutfit", "PreviousOutfit", "NumOutfits", and "ChangeOutfit".

Pay close attention to the SetSkeletonType blueprint function of the Fullbody Avatar SDK Actor: it sets the skeleton asset your model will be loaded with. The available options depend on the pipeline type: FitPerson avatars can be loaded with the Epic skeleton or the default Avatar SDK skeleton, while MetaBody avatars can currently be loaded only with the Mixamo-compatible skeleton.
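The dependency between pipeline type and skeleton can be captured as a small lookup table that is checked before loading. The Python sketch below encodes exactly the combinations listed above. The string identifiers and the `check_skeleton` helper are illustrative and are not the enum values used by SetSkeletonType.

```python
# Combinations as listed in the text above; the identifiers are illustrative.
SUPPORTED_SKELETONS = {
    "FitPerson": {"Epic", "AvatarSDK"},
    "MetaBody": {"Mixamo"},
}


def check_skeleton(pipeline: str, skeleton: str) -> None:
    """Raise ValueError if the skeleton is not available for the pipeline."""
    allowed = SUPPORTED_SKELETONS.get(pipeline, set())
    if skeleton not in allowed:
        raise ValueError(
            f"{skeleton!r} is not available for {pipeline!r}; "
            f"choose one of {sorted(allowed)}"
        )
```

Validating the pair up front gives a clear error message instead of a model that loads with the wrong skeleton asset.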

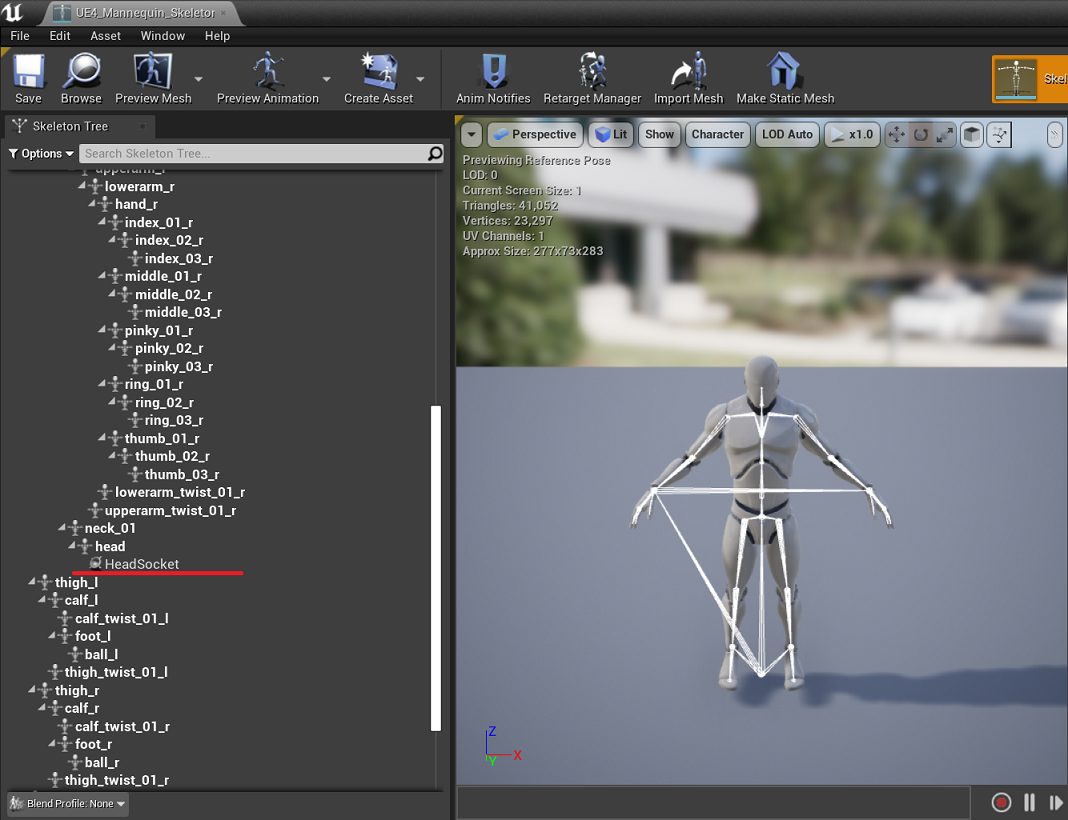

To attach the haircut to an avatar with the "Mannequin" skeleton, we have added a special socket to the Skeleton Tree.

The body material is created inside AFullBodyAvatarActor and is based on BodyBaseMaterial. Materials for outfits are also created inside AFullBodyAvatarActor and are based on OutfitBaseMaterial. Working with haircut materials is a little trickier: some haircuts use a single mesh, while others need two copies of the mesh with different materials, based on HaircutMaterial and HaircutMaterialNoCulling (the former uses custom depth, the latter does not). The UAvatarHaircutsManager class is responsible for selecting the way a haircut is rendered.

The sample also implements some additional functionality that is not part of the UE Plugin API but may serve as an example. The AvatarSDKSamplePawn class is responsible for positioning the AvatarSDKActor in the scene: it implements rotation with the mouse and encapsulates the transformation logic. The FullBody Haircuts component is an Actor Component that implements changing haircuts. When the "Previous haircut" or "Next haircut" button is pressed, its "Press" event handler calls the HaircutsComponent PreviousHaircut or NextHaircut method. The component handles downloading of haircut files and keeps a list of available haircuts along with the name of the currently selected one.
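The bookkeeping performed by the haircuts component (a list of available haircuts plus the currently selected name) can be modeled in a few lines. The Python sketch below is hypothetical throughout: `HaircutSelector` is not a plugin class, the haircut names are placeholders, and wrapping around at the ends of the list is an assumption of this sketch, not documented behavior of PreviousHaircut and NextHaircut.

```python
class HaircutSelector:
    """Hypothetical model of the haircut list kept by the sample component."""

    def __init__(self, names):
        self.names = list(names)
        self.index = 0 if self.names else None

    @property
    def current(self):
        # Name of the selected haircut, or None when the list is empty.
        return None if self.index is None else self.names[self.index]

    def _step(self, delta):
        if self.index is not None:
            # Wrap-around at the list ends is an assumption of this sketch.
            self.index = (self.index + delta) % len(self.names)
        return self.current

    def next_haircut(self):
        return self._step(1)

    def previous_haircut(self):
        return self._step(-1)
```

A button handler would call `next_haircut()` or `previous_haircut()` and pass the returned name on to whatever displays the haircut.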